
HTTP Services with Asynchronous Request-Reply Pattern in Go

Posted by Manikanth Kosnam on 19 Sep 2023

Building scalable HTTP services is a critical aspect of modern web development. To meet the growing demands of performance and responsiveness, leveraging asynchronous patterns becomes essential. The popular programming language Go provides robust solutions for developing such services.

In this article, we will explore the process of building scalable HTTP services in Go using the asynchronous request-reply pattern. By harnessing Go’s concurrency features, we can create high-performance applications that can handle multiple concurrent requests at scale.

While synchronous request-response interactions are popular in most HTTP services, there are situations where immediate responses are not feasible. Consider an HTTP request that requires substantial processing time, potentially taking a few minutes to complete. In such cases, holding the HTTP connection open for an extended period is impractical and inefficient.

Some architectures tackle this challenge by employing a message broker to decouple the request and response stages. We often achieve this decoupling using the Queue-Based Load Levelling pattern, which allows the client process and the back-end API to scale independently. While this separation offers scalability benefits, it introduces additional complexity when the client needs a success notification, because that step must now be handled asynchronously.

In such cases, the asynchronous request-reply pattern emerges as a powerful solution. By adopting this pattern, developers can effectively handle long-running requests without sacrificing performance or introducing unnecessary complexity. This article delves into the process of building such services in Go, showcasing how the asynchronous request-reply pattern can effectively handle time-consuming requests without blocking the server and ensuring a responsive experience for clients.

In the following sections, we will explore the key concepts of the asynchronous request-reply pattern, delve into practical implementation details using Go, and highlight the benefits of employing this pattern in building scalable HTTP services. By embracing Go’s concurrency features, developers can unlock the potential to create robust and efficient applications capable of handling a diverse range of HTTP requests with varying processing times.

How Does the Asynchronous Request-Reply Pattern Work?

We can implement this pattern with HTTP polling, which keeps the client informed of the request status and so enables a better user experience. Polling is useful when client-side code cannot easily use WebSockets, expose callback endpoints, or hold long-running connections. Even when callbacks are feasible, the additional libraries and services they require can introduce excessive complexity.

- In the context of HTTP polling, the client application initiates a synchronous call to the API, triggering a long-running operation on the back-end. The API promptly responds synchronously, aiming to provide the fastest possible response. It acknowledges the request by returning an HTTP 202 (Accepted) status code, indicating that it has been received and queued. We should validate the request and the intended action before commencing the long-running process and respond with an accepted status.

- The API response includes a status endpoint with a unique request ID that the client can poll periodically to check the status or result of the long-running operation. This approach allows the client to independently poll the status endpoint and retrieve relevant information without keeping the connection open.

- To offload the processing, the API can utilize another component, such as a message queue. The back-end system processes the request asynchronously, and every successful call to the status endpoint returns an HTTP 200 response. While the work is still pending, the status endpoint indicates that the operation is in progress. Once the work is complete, the status endpoint can either return a response indicating completion or redirect the client to another resource URL. For example, if the asynchronous operation creates a new resource, the status endpoint can redirect the client to the URI of that resource, or to the initial URI with an additional request parameter, where the client retrieves the result.

By incorporating HTTP polling and leveraging components like message queues, developers can implement an effective asynchronous request-reply pattern that allows for long-running operations, client-driven polling for status updates, and eventual completion notifications.

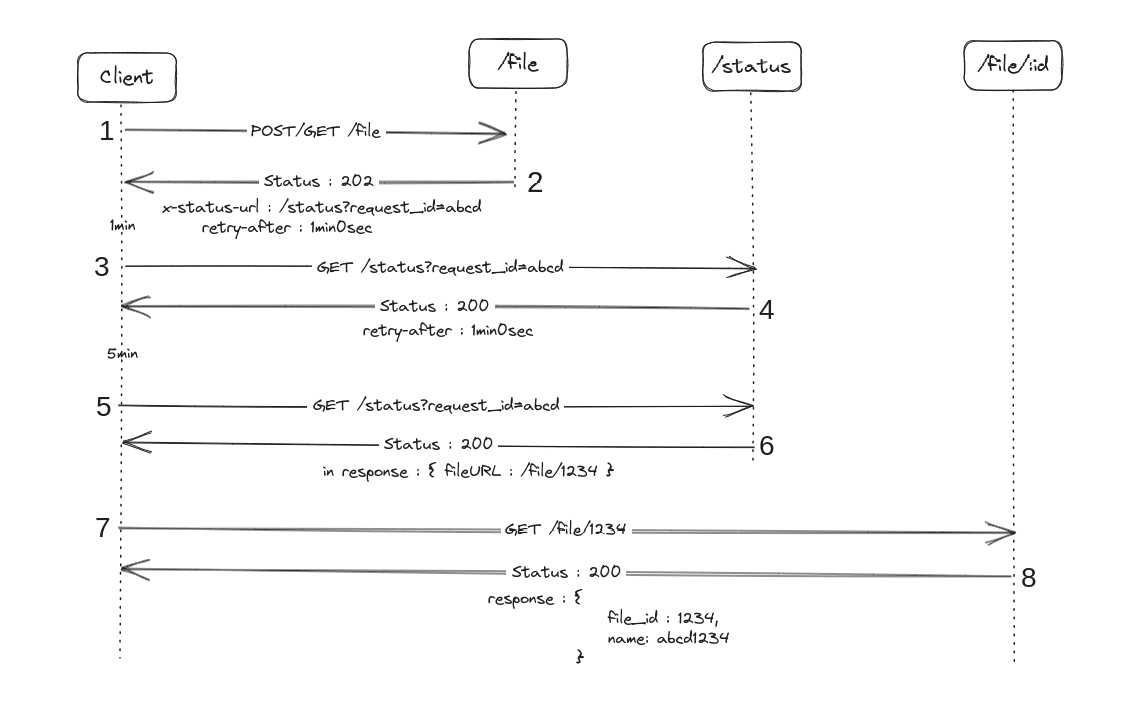

Consider a scenario where processing a file takes time and is done asynchronously. The flow looks like this:

- The client requests a file.

- The server responds with status 202 (Accepted) once processing has been triggered asynchronously. It provides the URL for checking the request status in the custom header `X-Status-URL` and tells the client to check back after a minute. If an error occurs before that point, such as a validation failure, the server should immediately return the appropriate status and error message.

- The client calls the status endpoint to check the request’s status.

- The server responds with the current status of the request and the time at which to check again.

- The client keeps polling until the request is processed (ideally with a timeout).

- When the file is processed and ready, the server replies with the URI of the file resource, telling the client where to fetch the file. If processing fails, the server should return the corresponding error message in the response.

- The client requests the provided URI.

- The server responds with a file and status code 200.

Implementation in Go

First, let’s add a struct representing the status response and a map that stores the status of each request, keyed by request ID. The following code does that.

type Status struct {

// The current state of the request

State string `json:"state"`

// The URL to redirect to when the request is complete

FileURL string `json:"fileURL,omitempty"`

}

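// statusMu guards requestStatus, which the HTTP handlers and the processor goroutine access concurrently

var statusMu sync.RWMutex

func storeStatus(requestID string, status Status) {

statusMu.Lock()

defer statusMu.Unlock()

if requestStatus == nil {

requestStatus = make(map[string]Status)

}

requestStatus[requestID] = status

}

func getStatus(requestID string) (Status, error) {

statusMu.RLock()

defer statusMu.RUnlock()

if v, ok := requestStatus[requestID]; ok {

return v, nil

}

return Status{}, fmt.Errorf("requestID not found")

}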

func generateRequestID() string {

// Generate a unique request ID using UUIDv4

return uuid.New().String()

}

Now, let’s add a handler for the file request. It takes a channel on which it sends each incoming request ID so that the request can be processed asynchronously.

func fileHandler(ch chan string) func(http.ResponseWriter, *http.Request) {

return func(w http.ResponseWriter, r *http.Request) {

// Generate a unique request ID using UUIDv4

requestID := generateRequestID()

// Store the request ID and status

storeStatus(requestID, Status{State: "NEW"})

ch <- requestID

// We are setting Retry-After to 1 minute, but it could be any time in the future as per your use case

w.Header().Set("Retry-After", time.Minute.String())

// Set the custom X-Status-URL header to the status URL

w.Header().Set("X-Status-URL", statusURL+requestID)

// Acknowledge the request with an HTTP 202 (Accepted) status code

w.WriteHeader(http.StatusAccepted)

// Write a message to the response body

w.Write([]byte("Request accepted. Processing in progress..\n"))

}

}

With the request status store and the handler in place, let’s add an asynchronous processor that reads requests from the channel and marks each one as completed. In a real service, the code between reading from the channel and marking the request as completed would contain the business logic. To keep things simple, the processor just sleeps for 5 minutes.

func processor(ch chan string, wg *sync.WaitGroup) {

defer wg.Done()

for r := range ch {

storeStatus(r, Status{State: "PROCESSING"})

time.Sleep(5 * time.Minute)

storeStatus(r, Status{State: "COMPLETED", FileURL: "http://localhost:8080/file/1"})

}

}

If you stitch the above code, it will look like this. There is additional code not discussed above, like the wait-group, the channel, the constant for the status URL, and the HTTP server.

package main

import (

"fmt"

"github.com/google/uuid"

"net/http"

"sync"

"time"

)

const (

statusURL = "http://localhost:8080/status?requestID="

)

type Status struct {

// The current state of the request

State string `json:"state"`

// The URL to redirect to when the request is complete

FileURL string `json:"fileURL,omitempty"`

}

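// statusMu guards requestStatus, which the HTTP handlers and the processor goroutine access concurrently

var statusMu sync.RWMutex

func storeStatus(requestID string, status Status) {

statusMu.Lock()

defer statusMu.Unlock()

if requestStatus == nil {

requestStatus = make(map[string]Status)

}

requestStatus[requestID] = status

}

func getStatus(requestID string) (Status, error) {

statusMu.RLock()

defer statusMu.RUnlock()

if v, ok := requestStatus[requestID]; ok {

return v, nil

}

return Status{}, fmt.Errorf("requestID not found")

}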

func generateRequestID() string {

// Generate a unique request ID using UUIDv4

return uuid.New().String()

}

var requestStatus map[string]Status

func main() {

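// Buffered so the handler can enqueue without waiting for the processor; 100 is an arbitrary capacity

ch := make(chan string, 100)

wg := &sync.WaitGroup{}

// Register the processor so that its wg.Done() call is balanced

wg.Add(1)

go processor(ch, wg)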

http.HandleFunc("/file", fileHandler(ch))

// http.HandleFunc("/status", statusHandler())

err := http.ListenAndServe(":8080", nil)

if err != nil {

fmt.Println(err)

}

close(ch)

wg.Wait()

}

func fileHandler(ch chan string) func(http.ResponseWriter, *http.Request) {

return func(w http.ResponseWriter, r *http.Request) {

// Generate a unique request ID using UUIDv4

requestID := generateRequestID()

// Store the request ID and status

storeStatus(requestID, Status{State: "NEW"})

ch <- requestID

// We are setting Retry-After to 1 minute, but it could be any time in the future as per your use case

w.Header().Set("Retry-After", time.Minute.String())

// Set the X-Status-URL header to the status URL

w.Header().Set("X-Status-URL", statusURL+requestID)

// Acknowledge the request with an HTTP 202 (Accepted) status code

w.WriteHeader(http.StatusAccepted)

// Write a message to the response body

w.Write([]byte("Request accepted. Processing in progress..\n"))

}

}

func processor(ch chan string, wg *sync.WaitGroup) {

defer wg.Done()

for r := range ch {

storeStatus(r, Status{State: "PROCESSING"})

time.Sleep(5 * time.Minute)

storeStatus(r, Status{State: "COMPLETED", FileURL: "http://localhost:8080/file/1"})

}

}

If you compile and run this now and make a request, the server responds as shown below, with two notable headers and a message acknowledging that the request was accepted. The custom `X-Status-URL` header provides the URL that the client can poll to check the request status. The `Retry-After` header tells the client how long to wait before checking; ideally, this value reflects the estimated processing time. Note that `time.Minute.String()` produces `1m0s`, whereas HTTP defines `Retry-After` as either an HTTP-date or a whole number of seconds, so a production service should send `60` instead.

gopherslab:file$ curl -i localhost:8080/file

HTTP/1.1 202 Accepted

X-Status-URL: http://localhost:8080/status?requestID=2f53af6b-71da-496e-8ca9-2afb5df543d9

Retry-After: 1m0s

Date: Fri, 14 Jul 2023 12:22:13 GMT

Content-Length: 43

Content-Type: text/plain; charset=utf-8

Request accepted. Processing in progress..

Requesting the status URL locally does not work yet because we have not added a handler for it. We can add the handler below and register it with the server in `main`. The handler uses `json.Marshal`, so `"encoding/json"` also needs to be added to the import list.

func main() {

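// Buffered so the handler can enqueue without waiting for the processor; 100 is an arbitrary capacity

ch := make(chan string, 100)

wg := &sync.WaitGroup{}

// Register the processor so that its wg.Done() call is balanced

wg.Add(1)

go processor(ch, wg)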

http.HandleFunc("/file", fileHandler(ch))

http.HandleFunc("/status", statusHandler())

err := http.ListenAndServe(":8080", nil)

if err != nil {

fmt.Println(err)

}

close(ch)

wg.Wait()

}

func statusHandler() func(http.ResponseWriter, *http.Request) {

return func(w http.ResponseWriter, r *http.Request) {

// Get the request ID from the request URL query string

requestID := r.URL.Query().Get("requestID")

status, err := getStatus(requestID)

if err != nil {

w.WriteHeader(http.StatusNotFound)

w.Write([]byte(err.Error()))

return

}

res, err := json.Marshal(status)

if err != nil {

w.WriteHeader(http.StatusInternalServerError)

w.Write([]byte(err.Error()))

return

}

// Setting retry time for another minute if the status is not completed

if status.State != "COMPLETED" {

w.Header().Set("Retry-After", time.Minute.String())

}

w.WriteHeader(http.StatusOK)

// Write a message to the response body

w.Write(res)

}

}

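If you recompile, run, make a request, and then query the status URL returned in the custom `X-Status-URL` header, you will see a response like the one below. It states that the request is still processing and that the next poll should happen in a minute. Because the handler does not set a `Content-Type`, Go's content sniffing labels the JSON body as `text/plain`; setting `application/json` explicitly would be more accurate.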

gopherslab:file$ curl -i http://localhost:8080/status?requestID=2f53af6b-71da-496e-8ca9-2afb5df543d9

HTTP/1.1 200 OK

Retry-After: 1m0s

Date: Fri, 14 Jul 2023 12:22:43 GMT

Content-Length: 22

Content-Type: text/plain; charset=utf-8

{"state":"PROCESSING"}

Once processing completes after 5 minutes, the status endpoint responds as shown below. It returns status code 200 together with the location of the resource, http://localhost:8080/file/1 (we did not add a handler for that URL, so requesting it would not work).

gopherslab:file$ curl -i http://localhost:8080/status?requestID=2f53af6b-71da-496e-8ca9-2afb5df543d9

HTTP/1.1 200 OK

Date: Fri, 14 Jul 2023 12:27:14 GMT

Content-Length: 66

Content-Type: text/plain; charset=utf-8

{"state":"COMPLETED","fileURL":"http://localhost:8080/file/1"}

So, with the above implementation, even though the work was done asynchronously in a goroutine, the server could report the current status of the request without the client having to maintain a persistent HTTP connection, and it could return the final result upon completion without tying up resources in the meantime.

Conclusion

In conclusion, Go and the asynchronous request-reply pattern provide a powerful combination for building scalable HTTP services. Go’s concurrency features allow developers to create high-performance applications capable of handling multiple requests simultaneously, ensuring responsiveness and meeting the growing demands of modern web development.

The asynchronous request-reply pattern offers a solution for handling long-running requests without sacrificing performance or introducing unnecessary complexity. By decoupling the request and response stages and leveraging techniques like HTTP polling, developers can provide a better user experience by keeping clients informed about the status of their requests. This pattern allows for independent scalability of client processes and back-end APIs while enabling success and eventual completion notifications.

Gophers Lab is a Digital Engineering company that leverages the latest and cutting-edge technologies, including Golang, to provide you with end-to-end development capabilities. Get in touch with us to know more.
